We're Tech Experts. Here's What You Should Know Before Trusting Google's AI Overview.

Sydni Ellis 10/06/2025 10:30

These automatic summaries that pop up when you search may seem convenient, but there are a few major points to keep in mind.

What was once science fiction now permeates practically every area of our lives. Artificial intelligence tools such as ChatGPT, Gemini, Grok and Meta AI have exploded in popularity over the last year. And while generative AI has its benefits, such as its ability to create original content in response to user queries, it can be hard to opt out of.

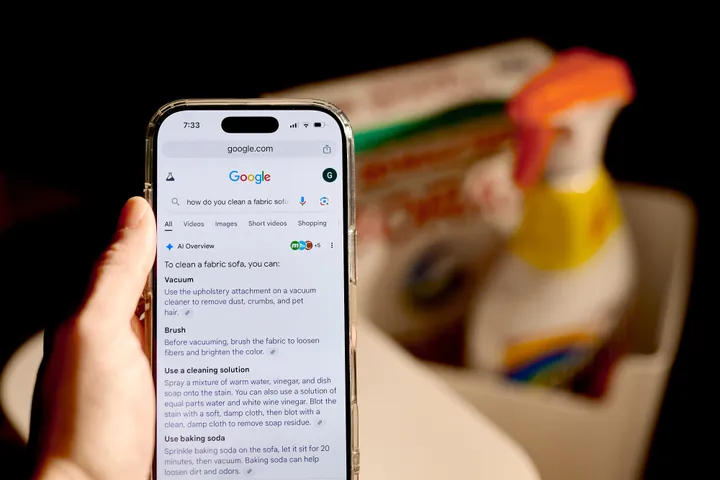

Take, for example, what happens when you Google a question and get a hodgepodge of answers from Google’s AI Overview.

AI Overviews launched in May 2024. Google’s AI, Gemini, analyzes information from a variety of online resources and gives users a quick overview in response to their queries at the top of the results page. The company now offers AI Overviews in more than 100 countries.

Sounds great in theory, right?

In practice, AI Overviews sometimes pull information from sources that may be unverified or inaccurate, such as Reddit threads, while pushing reliable sources further down the page. The result can be a summary that is wrong or even harmful.

“These AI-generated summaries are often unreliable and can feature outright incorrect or misleading information,” Andrey Meshkov, co-founder and chief technology officer of AdGuard, a company specializing in privacy and ad-blocking solutions, told HuffPost.

“For example, when searching for medical advice, the AI might generate even potentially dangerous results, like the infamous case of an AI Overview suggesting drinking urine to treat kidney stones,” he continued.

This is undoubtedly frustrating for users “who want to quickly access expert opinions and reputable sources without having to scroll past incomplete and often flawed AI summaries,” Meshkov said.

Yvette Schmitter, CEO and managing partner at the technology company Fusion Collective, also noted that AI Overview “isn’t always accurate.”

“If I’m searching for information, I don’t need potentially erroneous information presented to me first,” she told HuffPost.

“What happens if the AI summary ― which may or may not be responsive to sponsored search results ― doesn’t match the information that I’m seeing in the search results?” Schmitter asked. “Why is it different? What is right and what is wrong? Because these tools are entirely opaque, we have no way of knowing, which means the AI ‘feature’ adds no value.”

These errors stem in part from the fact that generative AI is “experimental and a work in progress,” according to Google. Sometimes an AI Overview will invent an answer, which is known as a hallucination, and sometimes it will misinterpret language (for example, telling you about baseball bats when you want to know about bats that live in caves).

The company encourages users to “think critically about the responses you get from generative AI tools” and to report inaccurate answers to improve the experience for everyone.

There’s also the question of protecting your own data. Google’s AI uses user interactions, including what you search for and what feedback you give, to “develop and improve generative AI experiences.”

And Google’s Privacy Policy explains that the company collects different types of information from everyone who uses any of its services, not just its AI tools.

Still, some experts suggest extra caution. “None of us can afford to simply believe that companies — whose sole purpose is maximizing profits — will do the right thing for and by us,” Schmitter said, adding that there are “no rules governing the responsible, ethical use of AI” right now.

“We’re in the digital Wild West, with no sheriff, guidelines, rules, or regulations in sight,” she continued. “We’re all in for quite a ride over the next few years, so it’s crucial to put in the work now. Be proactive about controlling your data, who can use it, and who has access to it.”